Babbli

2024 - Raspberry Pi, microphone, speaker, cardboard, screen, OpenAI API - 50 x 50 x 30 cm

Babbli is an interactive storytelling toy powered by a large language model that creates dynamic, bilingual stories based on the child’s voice input. Designed to support second language acquisition, Babbli is an adventure companion that accompanies the child through endless stories. The story begins by asking the child what they’d like it to be about, generating an introduction read in two languages. From there, it continues the adventure by asking follow-up questions in both languages, encouraging the child’s input to shape the narrative. The child’s responses—whether in their native or second language—guide the story, allowing for endless imaginative possibilities.

Using the power of the GPT API, natural-sounding voices from ElevenLabs, and a playful mouth animation on an LED screen powered by Arduino, Babbli turns every story into an engaging experience. It speaks through its own little speaker, bringing characters and tales to life. Stories are one of the oldest forms of human communication, and they have been shown to be an effective tool for language development in both first and second language learning, enhancing preschoolers’ reading, speaking, and listening abilities (Isbell et al., 2004).

Creative Process

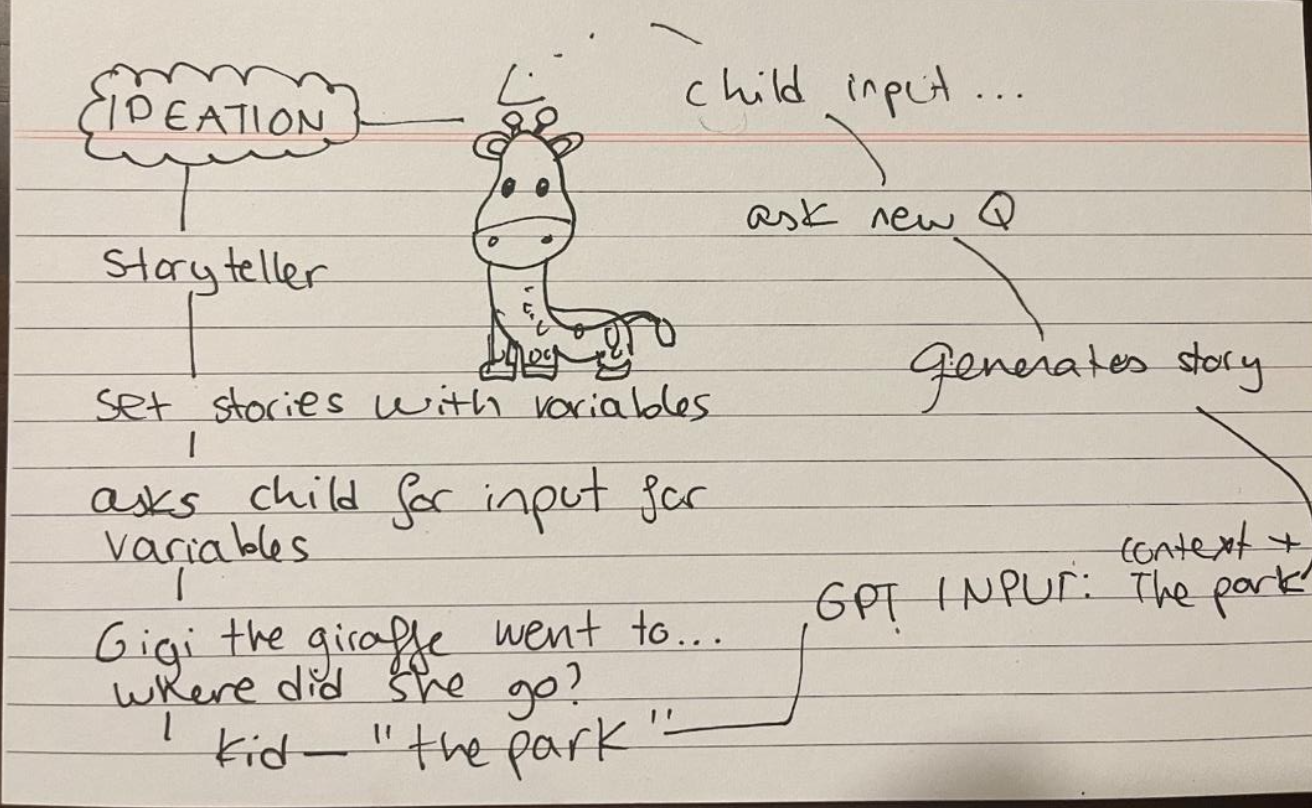

The ideation phase for Babbli began with brainstorming sessions and sketches of potential designs. The primary objective was to create an engaging character that children could interact with, one that would foster a sense of adventure and personal connection. After considering various names and character concepts, I decided on "Babbli," inspired by the Tower of Babel and the playful nature of children's babble when they are learning language.

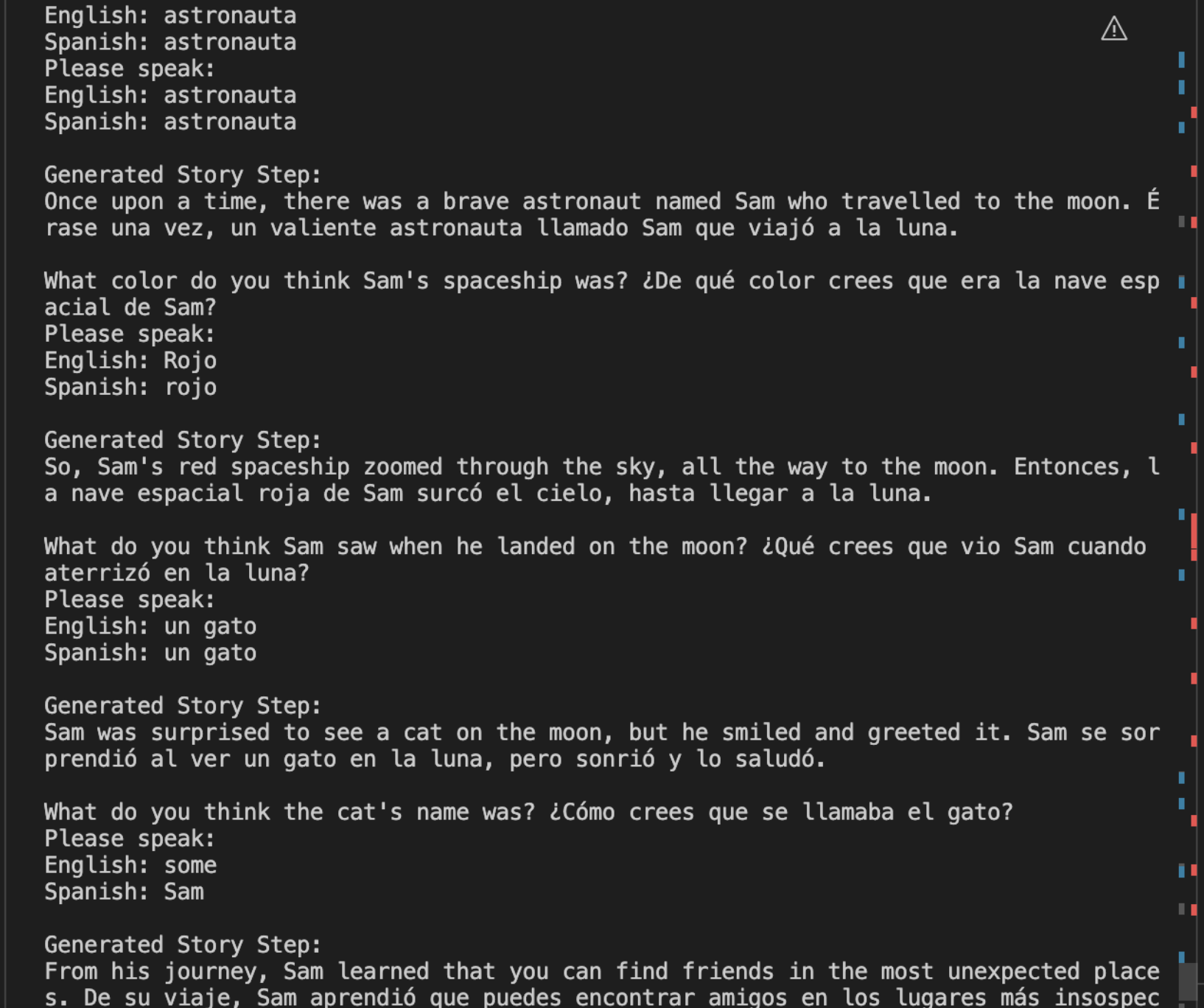

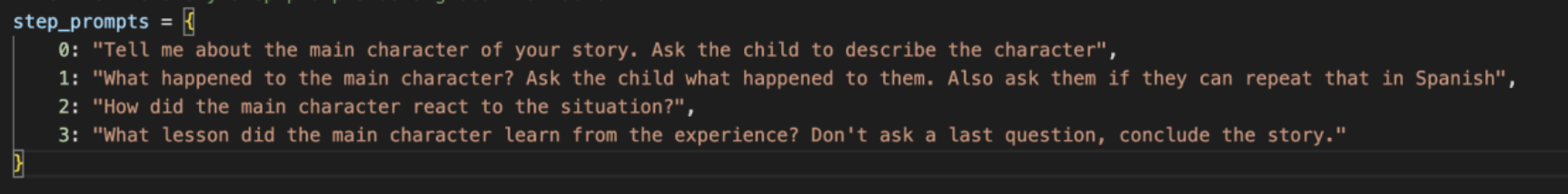

The first step in creating Babbli was building a simple storytelling prototype in Python. The goal was to convert speech to text, then use a GPT model to generate stories from a single word. Setting up speech recognition proved difficult, especially with installing PyAudio. After much troubleshooting, I fixed the issue by switching from Anaconda to a standard Python environment, which allowed the necessary packages to install. Once speech-to-text was working, I integrated the GPT model. The first version could generate short English and Spanish stories from a child’s word—for example, “Chicken”—and end with a question to spark interaction. The GPT prompt was designed to make stories age-appropriate, engaging, and educational. It instructed the model to act as a storyteller for kids under 8, tell short three-sentence adventures in both English and Spanish, and end with a follow-up question. This version showed Babbli’s core concept and guided improvements. Based on testing and feedback, I expanded language support beyond English and Spanish to include French and Russian, boosting its educational value and appeal.
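The prompt described above could be assembled along the following lines. This is a minimal sketch, not the project's actual code: the prompt wording, the function names `build_system_prompt`, `build_messages`, and `generate_story`, and the `model` parameter are assumptions made for illustration. The request itself assumes the official `openai` Python package (version 1 or later) and an API key available in the environment.

```python
def build_system_prompt(languages=("English", "Spanish"), max_age=8, sentences=3):
    """Return a storyteller instruction.

    The wording is illustrative only; the original prompt is not reproduced
    in this write-up.
    """
    language_list = " and ".join(languages)
    return (
        f"You are a storyteller for children under {max_age}. "
        f"Tell a short, age-appropriate adventure of {sentences} sentences "
        f"in {language_list}, then end with one follow-up question "
        "that invites the child to decide what happens next."
    )


def build_messages(topic, languages=("English", "Spanish")):
    """Build the chat messages for the first story segment from a spoken topic."""
    return [
        {"role": "system", "content": build_system_prompt(languages)},
        {"role": "user", "content": f"Tell me a story about: {topic.strip()}"},
    ]


def generate_story(topic, model, languages=("English", "Spanish")):
    """Send the messages to a chat model and return the story text.

    `model` is whichever chat model name the account has access to;
    it is deliberately left as a parameter here.
    """
    from openai import OpenAI  # imported lazily so the helpers above work offline

    client = OpenAI()  # reads the API key from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(topic, languages),
    )
    return response.choices[0].message.content
```

Keeping the prompt construction separate from the network call makes it easy to add languages such as French or Russian by changing only the `languages` argument, and to check the prompt text without spending API credits.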

I refined Babbli’s storytelling with dynamic expansion, where follow-up questions after each segment let the story evolve from the child’s responses. This interactivity kept children engaged and made the experience more immersive. To ensure coherence during infinite story generation, I structured the process into steps that adapted to the number of interactions.
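One way to structure such a step-based story loop is sketched below. The stage thresholds, the instruction wording, the `StorySession` class, and the history cap are all hypothetical; the write-up does not specify how the actual steps were defined. The sketch only shows the general idea of changing the instruction as the number of interactions grows while keeping a bounded window of recent turns.

```python
from dataclasses import dataclass, field

# Hypothetical stages: (minimum turn number, extra instruction for the model).
STAGES = [
    (0, "Introduce the hero and the setting."),
    (1, "Add a new challenge or discovery based on the child's answer."),
    (4, "Recap the adventure so far in one sentence, then continue it."),
]


def stage_instruction(turn):
    """Pick the instruction with the highest threshold not exceeding `turn`."""
    chosen = STAGES[0][1]
    for threshold, instruction in STAGES:
        if turn >= threshold:
            chosen = instruction
    return chosen


@dataclass
class StorySession:
    system_prompt: str
    max_history: int = 6  # assumed cap on remembered messages
    history: list = field(default_factory=list)
    turn: int = 0

    def next_messages(self, child_input):
        """Build the message list for the next story segment."""
        self.history.append({"role": "user", "content": child_input})
        recent = self.history[-self.max_history:]
        system = f"{self.system_prompt} {stage_instruction(self.turn)}"
        return [{"role": "system", "content": system}] + recent

    def record_reply(self, story_segment):
        """Store the generated segment and advance to the next turn."""
        self.history.append({"role": "assistant", "content": story_segment})
        self.turn += 1
```

In use, each child response would go through `next_messages`, the result would be sent to the model, and the returned segment would be passed to `record_reply` before the next question is asked.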

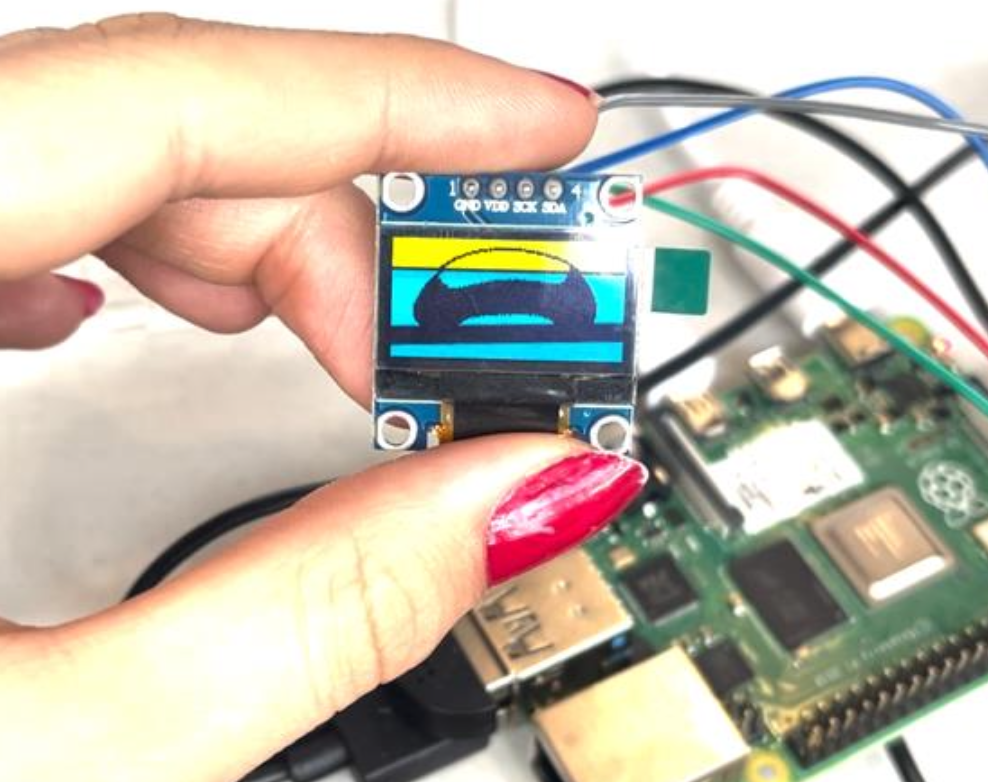

The next phase was building a physical prototype of Babbli. The main challenge was connecting components like microphones, speakers, and OLED screens through an Arduino board. After facing compatibility issues with the Arduino Leonardo, I switched to an Arduino UNO for easier setup. However, based on further testing and advice, I chose a Raspberry Pi instead, as it allowed Babbli to run independently without a laptop and offered more flexibility. On the Raspberry Pi, I set up the Python script, installed the necessary libraries, and integrated ElevenLabs’ text-to-speech API for natural, engaging voices. I then connected a speaker and microphone to enable auditory storytelling. To make the experience more immersive, I added a moving mouth animation on an OLED screen, synchronized with the narration through Python code.
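The synchronization between mouth animation and narration can be approximated with a simple timing schedule. The sketch below is an assumption about how this might be done, not the project's code: it presumes the duration of the generated audio clip is known, alternates two hypothetical frame names (`"open"` and `"closed"`) at a fixed interval, and leaves the actual drawing to a `draw` callback that would wrap whichever display library the screen uses.

```python
import time


def mouth_frames(duration_s, frame_interval_s=0.15):
    """Return (timestamp, frame_name) pairs that alternate open/closed.

    The final frame is always "closed" so the mouth rests when speech ends.
    """
    if frame_interval_s <= 0:
        raise ValueError("frame_interval_s must be positive")
    frames = []
    count = int(duration_s / frame_interval_s)
    for i in range(count):
        name = "open" if i % 2 == 0 else "closed"
        frames.append((round(i * frame_interval_s, 3), name))
    frames.append((round(max(duration_s, 0.0), 3), "closed"))
    return frames


def play_animation(frames, draw, clock=time.monotonic, sleep=time.sleep):
    """Call `draw(frame_name)` at each scheduled timestamp.

    `clock` and `sleep` are injectable so the schedule can be checked
    without real waiting or hardware.
    """
    start = clock()
    for timestamp, name in frames:
        delay = timestamp - (clock() - start)
        if delay > 0:
            sleep(delay)
        draw(name)
```

Starting `play_animation` at the same moment audio playback begins (for example in a separate thread) keeps the mouth moving for roughly the length of the narration. A fixed interval is the simplest choice; driving the frames from the audio's loudness would look more natural but needs access to the audio samples.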

The first OLED screen had a yellow-and-blue color scheme, which did not suit the toy’s design. I replaced it with new screens that were a better fit, and integration was successful. Early tests worked, but the screen proved too small, so I ordered a larger version to improve visual engagement. I also updated the code to better synchronize the mouth animation with the story narration, giving a more cohesive user experience.

The body was laser cut from a design converted from PDF to SVG, with adjustments for compatibility. The robot template, sourced from Etsy (KaBlackout), required manual fixes because of cutter malfunctions and imperfect cuts, but assembly was completed using a glue stick and double-sided tape. I also created a logo with the Alba font, which unexpectedly resembled the “Barbie” logo, highlighting the need for more research on design references in the future. Despite these challenges, the prototype was finished on time, demonstrating both the project’s feasibility and its potential for future improvement.